Imaging System Simulation

WIP

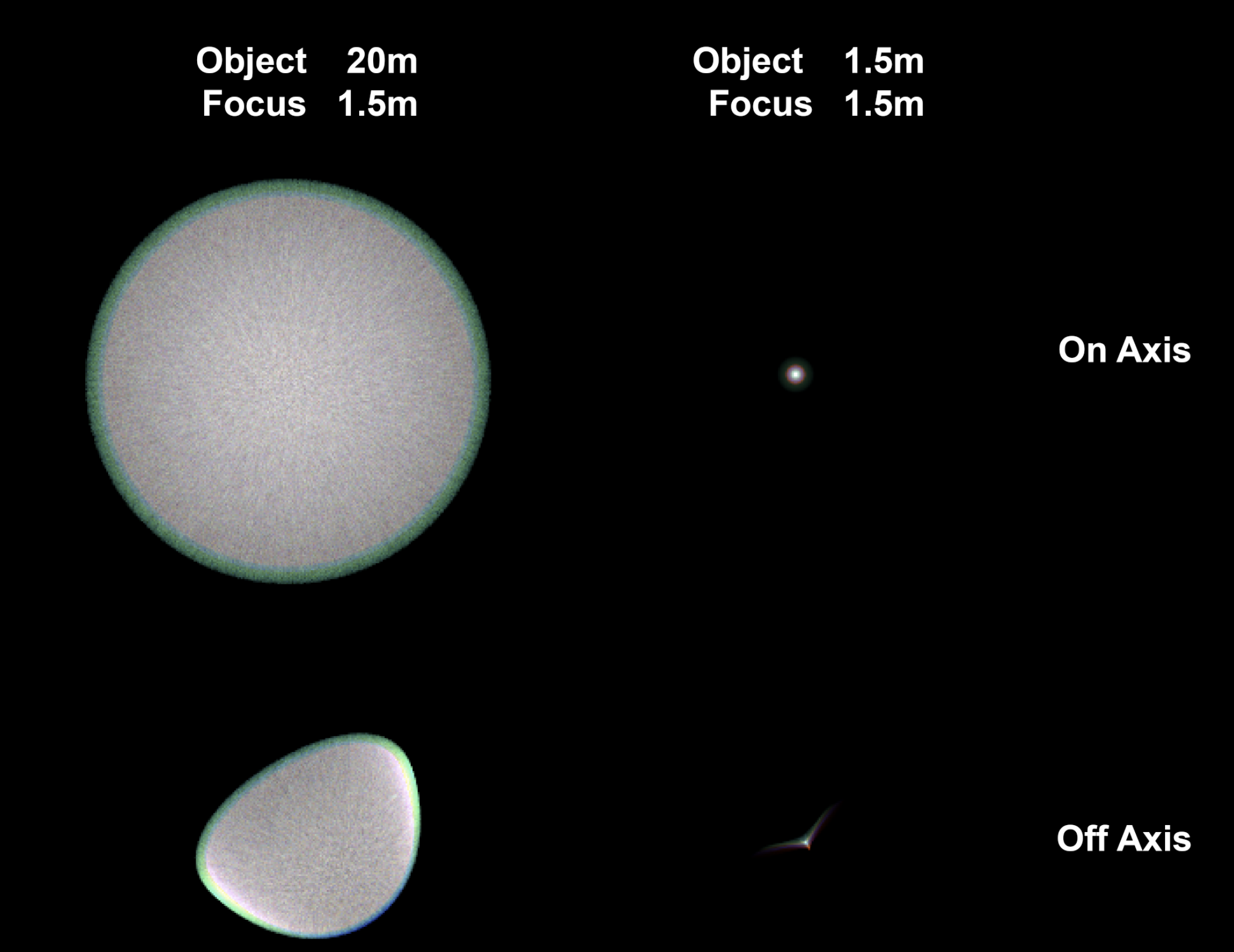

Having the imager as a separate class also makes the image resolution easy to control, and combined with CUDA, the framework can trace at high sample counts quickly. The neighboring images show spot tracing on a 24MP imager. Note that, due to conservation of energy, the off-focus spots had to be overexposed by about 6 stops to reach a comparable brightness.
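Here is a rough way to see where a figure like 6 stops comes from. If the same energy is spread over a larger spot, irradiance per unit area falls with the spot's area. The sketch assumes uniform, disc-shaped spots, which real defocused spots are not. It uses a hypothetical helper, `exposure_compensation_stops`, and is an illustration, not the framework's code.

```python
import math

def exposure_compensation_stops(r_focused: float, r_defocused: float) -> float:
    """Extra exposure (in stops) for a defocused spot to match the per-area
    brightness of a focused one, assuming both spread the same total energy
    uniformly over a disc of the given radius."""
    # Energy is conserved, so irradiance scales as 1 / area, and area as radius^2.
    area_ratio = (r_defocused / r_focused) ** 2
    return math.log2(area_ratio)

# A spot whose radius grows 8x covers 64x the area: roughly log2(64) = 6 stops.
stops = exposure_compensation_stops(0.005, 0.040)
```

Because stops are base 2 and area goes with radius squared, each doubling of the spot radius costs 2 stops.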

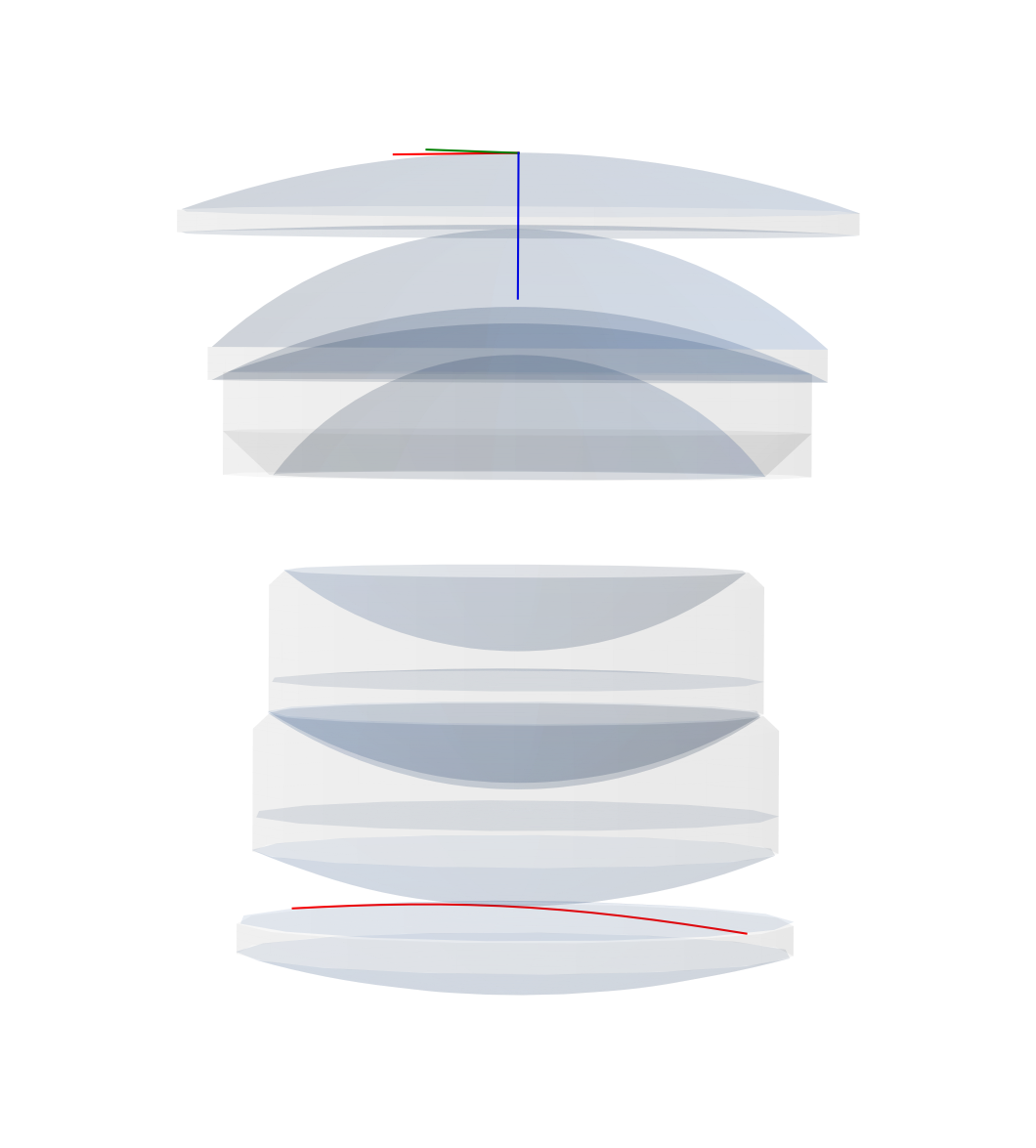

Similar to Zemax OpticStudio, the framework models a lens as a sequence of surfaces, and it can also construct the lens directly from a ZMX file. In addition to the explicitly defined surfaces, the framework uses the clear semi-diameter of each surface to construct the boundary surfaces that enclose those surfaces into lens elements (singlets, doublets, etc.).
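The following is a minimal sketch of such a surface list. It assumes a Zemax-like convention where each surface stores the medium that follows it. The `Surface` class, `group_elements`, and `edge_thickness` are hypothetical names. Placing the edge at the smallest clear semi-diameter is a simplification; elements whose surfaces have different diameters would need a stepped edge.

```python
import math
from dataclasses import dataclass

@dataclass
class Surface:
    radius: float         # vertex radius of curvature in mm (math.inf = flat)
    thickness: float      # axial distance to the next surface in mm
    material: str         # medium following this surface ("AIR" or a glass name)
    semi_diameter: float  # clear semi-diameter in mm

def sag(radius, h):
    """Sag of a spherical surface at height h."""
    if math.isinf(radius):
        return 0.0
    c = 1.0 / radius
    return c * h * h / (1.0 + math.sqrt(1.0 - (c * h) ** 2))

def group_elements(surfaces):
    """Split a surface list into elements: each run of surfaces ending in one followed by air."""
    elements, run = [], []
    for s in surfaces:
        run.append(s)
        if s.material.upper() == "AIR":
            if len(run) > 1:  # a lone air surface (object, stop) is not an element
                elements.append(run)
            run = []
    return elements

def edge_thickness(element):
    """Axial glass thickness at the element's smallest clear semi-diameter."""
    h = min(s.semi_diameter for s in element)
    center = sum(s.thickness for s in element[:-1])
    return center + sag(element[-1].radius, h) - sag(element[0].radius, h)

lens = [
    Surface(math.inf, 100.0, "AIR", 20.0),  # hypothetical object-side air gap
    Surface(50.0, 5.0, "N-BK7", 10.0),      # biconvex singlet, front surface
    Surface(-50.0, 45.0, "AIR", 10.0),      # rear surface, back to air
]
elements = group_elements(lens)
```

A negative edge thickness from `edge_thickness` would flag a prescription whose clear semi-diameters cannot physically close into an element.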

The image on the left shows the surfaces constructed from the Canon EF 50mm f/1.2 L patent (JP 2007333790 Example 1). The red sag line indicates an aspheric surface.
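Aspheric surfaces in patent tables are usually given in the even-asphere form: a conic base plus a polynomial in even powers of height. Below is a hedged sketch of that sag equation. The function names are hypothetical. The exact coefficient convention (for example, whether an A2 term is present) varies between patents and programs, so check it against the source table.

```python
import math

def even_asphere_sag(h, radius, conic=0.0, coeffs=()):
    """Sag z(h) of an even asphere, coeffs = (A4, A6, A8, ...):
    z = c h^2 / (1 + sqrt(1 - (1 + k) c^2 h^2)) + A4 h^4 + A6 h^6 + ..."""
    c = 0.0 if math.isinf(radius) else 1.0 / radius
    base = c * h * h / (1.0 + math.sqrt(1.0 - (1.0 + conic) * c * c * h * h))
    poly = sum(a * h ** (2 * i + 4) for i, a in enumerate(coeffs))
    return base + poly

def aspheric_departure(h, radius, conic=0.0, coeffs=()):
    """Deviation from the base sphere that shares the same vertex radius."""
    return even_asphere_sag(h, radius, conic, coeffs) - even_asphere_sag(h, radius)

# Hypothetical surface: R = 50 mm, k = -1 (paraboloid base), A4 = 1e-6.
profile = [(h, aspheric_departure(h, 50.0, -1.0, (1e-6,))) for h in (0.0, 5.0, 10.0)]
```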

Unlike Zemax or CODE V, the framework also includes an imager class, so results can be presented as RGB images rather than as spectral distributions or modulation-versus-frequency plots. The computation itself is still carried out entirely in terms of wavelength and radiance, with polarization taken into account. Presenting the output as images makes the results more intuitive to interpret.
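To illustrate how a spectral trace can end up as RGB, the sketch integrates sampled spectral radiance against per-channel sensitivity curves. The Gaussian curves in `SENSITIVITY` are made-up stand-ins for measured sensor data. Polarization is ignored, and nothing here is taken from the framework itself.

```python
import math

def gaussian(x, mu, sigma):
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2)

# Hypothetical channel sensitivities: (peak wavelength nm, width nm).
SENSITIVITY = {"R": (600.0, 30.0), "G": (540.0, 30.0), "B": (460.0, 25.0)}

def spectrum_to_rgb(wavelengths, radiance):
    """Integrate sampled spectral radiance against each channel's curve (trapezoidal rule)."""
    rgb = {}
    for ch, (mu, sigma) in SENSITIVITY.items():
        total = 0.0
        for i in range(len(wavelengths) - 1):
            dl = wavelengths[i + 1] - wavelengths[i]
            f0 = radiance[i] * gaussian(wavelengths[i], mu, sigma)
            f1 = radiance[i + 1] * gaussian(wavelengths[i + 1], mu, sigma)
            total += 0.5 * (f0 + f1) * dl
        rgb[ch] = total
    return rgb["R"], rgb["G"], rgb["B"]

wl = list(range(380, 781, 5))              # 5 nm samples across the visible range
spd = [gaussian(w, 600, 5) for w in wl]    # a narrow-band source near 600 nm
r, g, b = spectrum_to_rgb(wl, spd)
```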

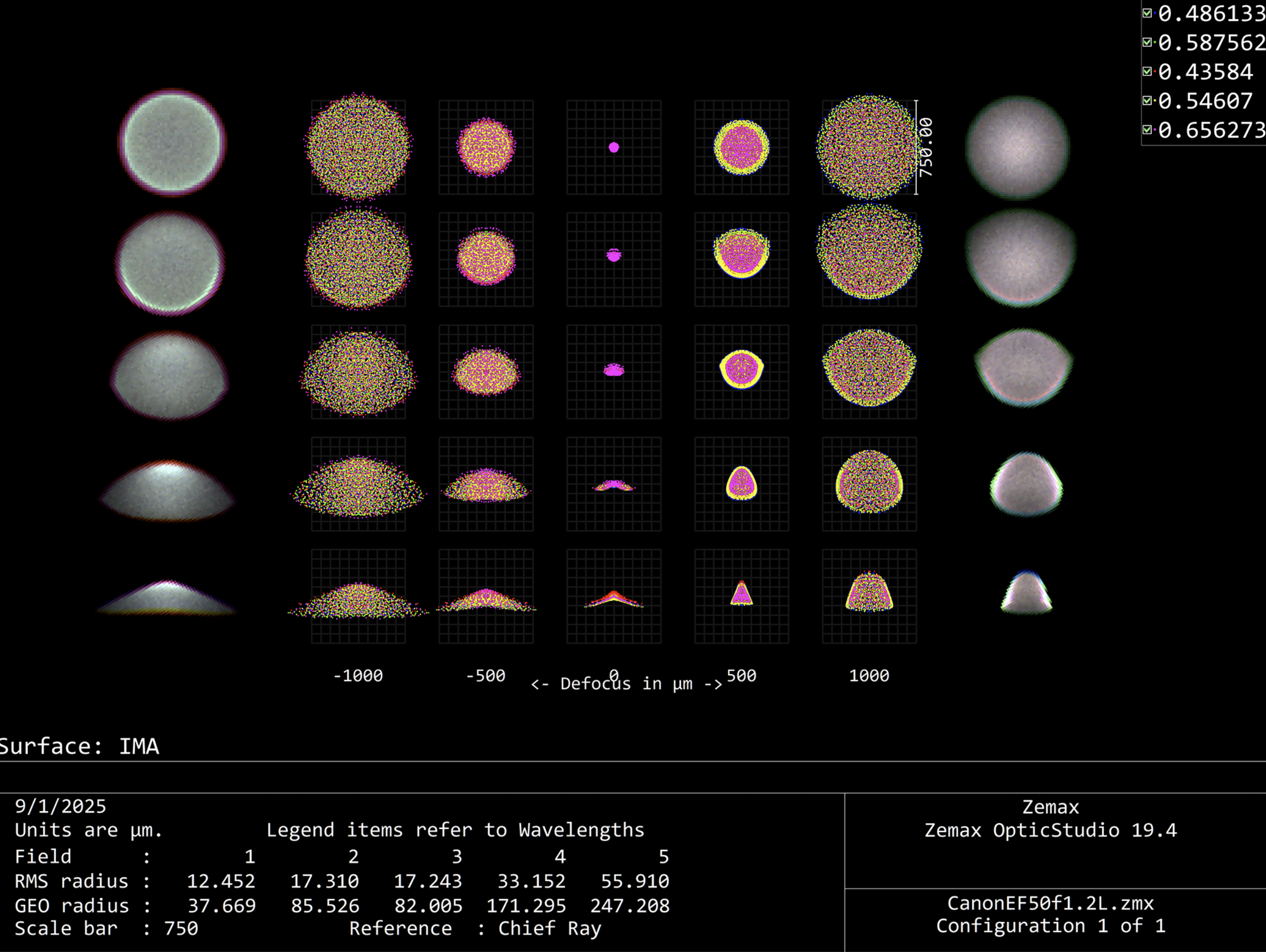

The image on the left shows a Zemax through-focus spot diagram, with the simulated RGB results from this framework superimposed on either side.

Sequential imaging

The framework performs fast sequential imaging of objects in object space.

The GIF shows an ISO 12233 chart shot through a Biotar 50mm f/1.4 lens. The chart is placed at different distances and scaled to fit the field of view, revealing the lens's distortion and focus breathing.
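A thin-lens model gives some intuition for how the chart must be rescaled with distance. It predicts a lateral magnification m = f / (u - f) for an object at distance u. The sketch uses this to find the chart height that fills the sensor. It deliberately ignores distortion and focus breathing, which are exactly what tracing the real Biotar prescription adds on top. The function names, the 24 mm sensor height, and the 50 mm focal length are illustrative assumptions.

```python
def magnification(f, u):
    """Thin-lens lateral magnification (absolute value) for an object at distance u > f."""
    return f / (u - f)

def chart_height_to_fill(sensor_height, f, u):
    """Object-space chart height whose image exactly spans the sensor height."""
    return sensor_height / magnification(f, u)

for u in (500.0, 1000.0, 2000.0):  # object distances in mm
    print(u, chart_height_to_fill(24.0, 50.0, u))
```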

The virtual lens is operated just like a real one: the aperture can be set to a specific f-number, and the user can either adjust the focus distance manually or type in a distance and let the system auto-focus to it.
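Auto-focusing to a typed-in distance can be framed as a one-dimensional minimization of blur over the sensor (or focusing-group) position. The sketch uses a golden-section search with a thin-lens blur model as a stand-in. In a traced system, the objective would more likely be an RMS spot size computed from actual rays. All names are hypothetical.

```python
import math

def blur_diameter(sensor_dist, f, n_stop, obj_dist):
    """Thin-lens geometric blur diameter with the sensor sensor_dist behind the lens."""
    image_dist = f * obj_dist / (obj_dist - f)  # where the object actually focuses
    aperture = f / n_stop                       # entrance pupil diameter
    return aperture * abs(sensor_dist - image_dist) / image_dist

def autofocus(f, n_stop, obj_dist, lo, hi, tol=1e-9):
    """Golden-section search for the sensor distance that minimizes blur on [lo, hi]."""
    inv_phi = (math.sqrt(5.0) - 1.0) / 2.0
    a, b = lo, hi
    while b - a > tol:
        # Both probe points are recomputed each step for clarity.
        c = b - inv_phi * (b - a)
        d = a + inv_phi * (b - a)
        if blur_diameter(c, f, n_stop, obj_dist) < blur_diameter(d, f, n_stop, obj_dist):
            b = d  # minimum lies in [a, d]
        else:
            a = c  # minimum lies in [c, b]
    return 0.5 * (a + b)

# Focus a 50 mm f/1.4 lens on an object 2 m away (all lengths in mm).
sensor_pos = autofocus(50.0, 1.4, 2000.0, 50.0, 60.0)
```

Golden-section search only requires the objective to be unimodal over the bracket, which holds for this V-shaped blur curve. A traced objective may need a coarse scan first to bracket the best focus.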

Non-sequential imaging

While performing sequential imaging, the framework also keeps track of non-sequential rays.

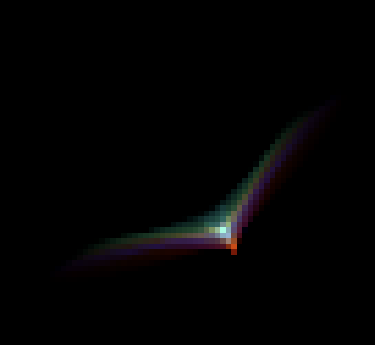

If needed, the non-sequential rays can be extracted and traced further to produce flare and glare. The simulation accounts not only for the primary optical surfaces but also for lens edges, edge blackening, and the metal inner lens barrel.
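The faintness of flare and ghosts follows from the Fresnel equations. A ghost needs at least two reflections, so its energy scales with the product of two small reflectances. The sketch computes s- and p-polarized reflectance at a smooth dielectric interface. It assumes non-absorbing, uncoated media, and the helper names are hypothetical.

```python
import math

def fresnel_reflectance(n1, n2, theta_i):
    """Power reflectances (Rs, Rp) at a smooth interface between non-absorbing media.
    theta_i is the angle of incidence in radians, in [0, pi/2)."""
    sin_t = n1 / n2 * math.sin(theta_i)
    if sin_t >= 1.0:
        return 1.0, 1.0  # total internal reflection
    cos_i = math.cos(theta_i)
    cos_t = math.sqrt(1.0 - sin_t * sin_t)
    rs = (n1 * cos_i - n2 * cos_t) / (n1 * cos_i + n2 * cos_t)
    rp = (n2 * cos_i - n1 * cos_t) / (n2 * cos_i + n1 * cos_t)
    return rs * rs, rp * rp

# Unpolarized light sees the average of the two polarizations.
rs0, rp0 = fresnel_reflectance(1.0, 1.5, 0.0)
r_unpolarized = 0.5 * (rs0 + rp0)
# A ghost from two uncoated surfaces carries roughly r * r of the incoming energy.
ghost_fraction = r_unpolarized * r_unpolarized
```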

The user can also apply anti-reflection coatings to specific surfaces, making flare and glare effects art-directable.
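As a worked example of what a coating buys, consider a single quarter-wave layer of index n_1 on a substrate n_s in a medium n_0. At its design wavelength, its normal-incidence reflectance is R = ((n_0 n_s - n_1^2) / (n_0 n_s + n_1^2))^2, which vanishes when n_1 = sqrt(n_0 n_s). The sketch assumes an MgF2-like layer (n about 1.38) on crown glass (n = 1.52). How the framework models coatings is not stated, so this is only an illustration.

```python
import math

def bare_reflectance(n0, n_sub):
    """Normal-incidence reflectance of an uncoated interface."""
    r = (n0 - n_sub) / (n0 + n_sub)
    return r * r

def quarter_wave_ar_reflectance(n0, n_coat, n_sub):
    """Normal-incidence reflectance of a single quarter-wave layer at its design wavelength."""
    r = (n0 * n_sub - n_coat ** 2) / (n0 * n_sub + n_coat ** 2)
    return r * r

bare = bare_reflectance(1.0, 1.52)                                # about 4.3 %
coated = quarter_wave_ar_reflectance(1.0, 1.38, 1.52)             # about 1.3 %
ideal = quarter_wave_ar_reflectance(1.0, math.sqrt(1.52), 1.52)   # zero when n_coat = sqrt(n0 * n_sub)
```

Dropping per-surface reflectance from about 4.3% to about 1.3% dims a two-bounce ghost by roughly a factor of ten. This is why coating or leaving uncoated particular surfaces is an effective handle on flare.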

Aperture control

Historically, in most photographic applications the lens is not used at its maximum aperture; however, many similar applications do not account for this and only simulate the lens wide open.

The framework, however, not only simulates stopped-down apertures but also reproduces the shape formed by the aperture blades. By defining individual blades and assembling them into a diaphragm, the framework can accurately recreate any type of aperture and, consequently, any bokeh shape.
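An out-of-focus point is imaged as a scaled copy of the aperture opening, so bokeh shape follows directly from blade geometry. The sketch assumes straight blades that form a regular polygon; curved or rounded blades would need a different boundary. It samples points uniformly inside the opening using a triangle fan. The names `polygon_vertices` and `sample_aperture` are hypothetical. Because points are drawn inside the opening directly, no sample is rejected.

```python
import math
import random

def polygon_vertices(n_blades, apothem, rotation=0.0):
    """Vertices of the regular n-gon opening formed by n straight blades."""
    circumradius = apothem / math.cos(math.pi / n_blades)
    return [(circumradius * math.cos(rotation + 2 * math.pi * k / n_blades),
             circumradius * math.sin(rotation + 2 * math.pi * k / n_blades))
            for k in range(n_blades)]

def sample_aperture(verts, rng):
    """Uniform random point inside a regular polygon centred on the origin."""
    # The fan triangles (origin, v_k, v_k+1) of a regular polygon have equal
    # areas, so choosing one uniformly keeps the overall density uniform.
    k = rng.randrange(len(verts))
    (ax, ay), (bx, by) = verts[k], verts[(k + 1) % len(verts)]
    u, v = rng.random(), rng.random()
    if u + v > 1.0:  # fold the unit square onto the triangle
        u, v = 1.0 - u, 1.0 - v
    return (u * ax + v * bx, u * ay + v * by)

rng = random.Random(42)
verts = polygon_vertices(9, 1.0)  # e.g. a 9-blade diaphragm
points = [sample_aperture(verts, rng) for _ in range(1000)]
```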

When given an aperture value, the framework compares the theoretical entrance pupil size at the maximum aperture with that at the desired aperture and calculates the target area ratio. The individual blades are then rotated while the framework keeps updating the resulting area ratio, automatically adjusting the rotation so that it stops at the exact aperture value. Additionally, the diaphragm is linked directly to the pupil, so when sampling from the pupil rather than from the first surface, no samples are wasted as the aperture is reduced.
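The iterative blade adjustment can be sketched as a bisection. Entrance-pupil diameter scales as 1/N, so the target area ratio between f/N and the maximum aperture f/N_max is (N_max/N)^2. The sketch models the opening as a regular polygon clipped by the full-aperture circle. It also assumes a hypothetical mechanism in which the polygon's apothem shrinks as R cos(theta) with blade rotation theta; the real blade kinematics are not given in the text.

```python
import math

def opening_area(apothem, radius, n_blades):
    """Area of a regular n-gon (apothem a) intersected with the full-aperture circle (radius R)."""
    a, r = apothem, radius
    if a >= r:
        return math.pi * r * r  # blades fully retracted: circular opening
    if a <= r * math.cos(math.pi / n_blades):
        return n_blades * a * a * math.tan(math.pi / n_blades)  # polygon lies inside the circle
    segment = r * r * math.acos(a / r) - a * math.sqrt(r * r - a * a)
    return math.pi * r * r - n_blades * segment  # circle minus the n clipped segments

def blade_apothem(theta, radius):
    """Hypothetical blade mechanism: apothem shrinks as cos(theta) of the blade rotation."""
    return radius * math.cos(theta)

def solve_rotation(n_max, n_target, n_blades=6, radius=1.0, tol=1e-12):
    """Bisect blade rotation until opening area / full area matches (N_max / N)^2."""
    target = (n_max / n_target) ** 2  # pupil diameter ~ 1/N, so area ~ 1/N^2
    full = math.pi * radius * radius
    lo, hi = 0.0, math.pi / 2  # area decreases monotonically with theta here
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        ratio = opening_area(blade_apothem(mid, radius), radius, n_blades) / full
        if ratio > target:
            lo = mid  # still too open: rotate further
        else:
            hi = mid
    return 0.5 * (lo + hi)

theta = solve_rotation(1.2, 2.8)  # stop an f/1.2 lens down to f/2.8
```

Bisection is convenient here because the opening area only needs to be monotonic in the rotation angle. No derivative of the blade geometry is required.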

Modular design for both 2D and 3D

The framework works with both flat-field (2D) input and 3D scenes, thanks to its ability to abstract any input into emission sources using a modified version of Huygens’ principle.

The image below shows a scene rendered with a Canon EF 50mm f/1.2 L lens wide open, focused on the car, which is located 5 yards away from the camera.

When used in the second imaging pipeline, the framework also allows the world units to be changed at no cost, whereas the same operation could break the software for a large 3D scene with billions of polygons, dozens of rigged characters, and countless special effects. The image below shows the same scene scaled down 10 times; as a result, the focus distance became significantly shorter and the depth of field ended up as thin as a sheet of paper.
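The scale effect can be checked with the standard thin-lens depth-of-field formulas: H = f^2/(N c) + f, D_near = s(H - f)/(H + s - 2f), and D_far = s(H - f)/(H - s). The sketch assumes a 0.03 mm circle of confusion and treats the EF 50mm f/1.2 L as a thin lens, so the numbers are only indicative. Shrinking the scene 10 times brings the subject 10 times closer. Well inside the hyperfocal distance, depth of field grows roughly with the square of subject distance, so it collapses by roughly two orders of magnitude.

```python
def depth_of_field(f, n_stop, coc, s):
    """Thin-lens near and far limits of acceptable sharpness; all lengths in mm."""
    hyperfocal = f * f / (n_stop * coc) + f
    near = s * (hyperfocal - f) / (hyperfocal + s - 2 * f)
    far = s * (hyperfocal - f) / (hyperfocal - s) if s < hyperfocal else float("inf")
    return near, far

YARD_MM = 914.4
for scale in (1, 10):
    s = 5 * YARD_MM / scale  # subject distance after shrinking the scene
    near, far = depth_of_field(50.0, 1.2, 0.03, s)
    print(f"scene / {scale}: subject at {s:.0f} mm, depth of field {far - near:.1f} mm")
```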

Imager emulation

The imager not only allows the framework to produce RGB images, but also supports features such as testing the effects of the UV/IR-cut glass, the color filter array (CFA), and the microlens array (MLA), and even film effects such as halation and spectral response.
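The spatial part of a CFA can be illustrated by sampling a full-RGB image through a mosaic, so that each photosite records a single channel. The sketch assumes an RGGB Bayer layout. It skips everything spectral (filter transmission curves, crosstalk, microlenses), and `bayer_mosaic` is a hypothetical name.

```python
def bayer_mosaic(rgb):
    """Sample an H x W image of (r, g, b) tuples through an RGGB Bayer CFA.
    Returns an H x W grid holding one channel value per photosite."""
    # Channel index for each position in the repeating 2x2 RGGB tile.
    pattern = ((0, 1), (1, 2))
    return [[pixel[pattern[y % 2][x % 2]] for x, pixel in enumerate(row)]
            for y, row in enumerate(rgb)]

image = [[(1.0, 0.5, 0.25)] * 4 for _ in range(2)]
raw = bayer_mosaic(image)
# raw == [[1.0, 0.5, 1.0, 0.5], [0.5, 0.25, 0.5, 0.25]]
```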

The image on the right shows the ISO 12233 chart shot through a Zeiss Hologon 15mm f/8 lens while the thickness of the UV/IR glass in front of the imager is gradually increased. This is one of the many reasons vintage lenses seem to perform worse on modern digital cameras than on film cameras.
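A first-order explanation of the effect: a plane-parallel plate of thickness t and index n shifts the focus of a ray crossing it at angle theta. The shift is t(1 - cos(theta)/sqrt(n^2 - sin^2(theta))), which reduces to t(1 - 1/n) on axis. Because the shift grows with ray angle, the plate adds spherical aberration and field-dependent focus errors. Designs with a very short back focus, like the Hologon, deliver steep ray angles and are affected strongly. The 2 mm thickness and n = 1.52 below are illustrative assumptions.

```python
import math

def plate_focus_shift(thickness, n, theta):
    """Axial focus shift (same units as thickness) caused by a plane-parallel plate
    for a ray crossing it at angle theta (radians, measured in air from the axis)."""
    return thickness * (1.0 - math.cos(theta) / math.sqrt(n * n - math.sin(theta) ** 2))

# Illustrative 2 mm filter stack with n = 1.52: steeper rays focus further back.
for deg in (0, 10, 20, 30):
    print(deg, round(plate_focus_shift(2.0, 1.52, math.radians(deg)), 3))
```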

Full production IO support

The framework is designed specifically for media production workflows, so introducing this imaging system simulation step requires only minimal changes to an existing pipeline.

When used in 3D post-production compositing, the framework supports reading EXR files with multiple channels, such as alpha and Z-depth. It can also output renders as EXR files to preserve the high bit-depth information needed for further editing.
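Why a float format matters for downstream editing can be shown with single pixel values. The sketch round-trips linear values through an 8-bit encoding and through IEEE 754 half precision, the 16-bit float type EXR files commonly store. It says nothing about which pixel type the framework actually writes, and the helper names are hypothetical.

```python
import struct

def to_8bit(x):
    """Quantize a linear value to an 8-bit code (linear encoding for simplicity), clipping at 1.0."""
    return round(min(max(x, 0.0), 1.0) * 255) / 255

def to_half(x):
    """Round-trip a value through IEEE 754 half precision (struct format 'e')."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

highlight = 16.0  # a specular highlight 4 stops above diffuse white
print(to_8bit(highlight), to_half(highlight))  # prints: 1.0 16.0
```

The same applies in the shadows: a linear value of 0.001 quantizes to 0 in 8 bits but survives in half precision. This is why regrading or relighting downstream benefits from float output.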

For 2D productions such as hand-drawn animation, the framework supports the standard 8-bit workflow but also allows extensions that compensate for its limited, less malleable dynamic range.

Diffraction

Other features

Lens prescription data.

Automatic material search based on IOR and Abbe number at a given Fraunhofer line.
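Below is a minimal sketch of an index/Abbe-number search. The Abbe number is V = (n_center - 1)/(n_blue - n_red). For the d line this is V_d = (n_d - 1)/(n_F - n_C), and the e-line variant uses n_e, n_F', and n_C'. The four-glass catalog holds approximate SCHOTT n_d/V_d values for illustration only. The weight balancing index error against Abbe-number error is an arbitrary assumption, as are the function names.

```python
import math

def abbe_number(n_center, n_blue, n_red):
    """Abbe number, e.g. V_d = (n_d - 1) / (n_F - n_C)."""
    return (n_center - 1.0) / (n_blue - n_red)

# Approximate SCHOTT (n_d, V_d) values, for illustration only.
CATALOG = {
    "N-BK7": (1.51680, 64.17),
    "N-SK16": (1.62041, 60.32),
    "F2": (1.62004, 36.37),
    "N-SF11": (1.78472, 25.68),
}

def find_glass(n_d, v_d, abbe_weight=0.01):
    """Return the catalog glass closest to (n_d, V_d) in a weighted distance."""
    def distance(entry):
        nd, vd = entry[1]
        return math.hypot(nd - n_d, abbe_weight * (vd - v_d))
    return min(CATALOG.items(), key=distance)[0]

# F2 and N-SK16 share nearly the same index, so the Abbe number decides.
print(find_glass(1.62, 36.0), find_glass(1.62, 60.0))  # prints: F2 N-SK16
```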

Geometric MTF.
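Geometric MTF is commonly computed from ray intercepts alone. Treat the spot, projected onto one axis, as a line spread function and take the modulus of its Fourier transform. The sketch assumes equal-weight rays along one direction (sagittal or tangential) and uses hypothetical function names. By definition, it ignores diffraction.

```python
import cmath
import math

def geometric_mtf(x_intercepts, freq):
    """Geometric MTF at spatial frequency `freq` (cycles/mm) from ray intercepts (mm).
    Each ray contributes equal weight to the line spread function; the MTF is the
    modulus of its normalized Fourier transform."""
    total = sum(cmath.exp(-2j * math.pi * freq * x) for x in x_intercepts)
    return abs(total) / len(x_intercepts)

# Two rays 10 um apart: contrast vanishes at 1 / (2 * 0.010 mm) = 50 cycles/mm.
curve = [geometric_mtf([-0.005, 0.005], f) for f in (0, 10, 25, 50)]
```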